From Jar to native cross-platform Java with a pipeline

Distribution has never been Java’s strength. However, newer technologies in the Java ecosystem offer more possibilities than a simple jar. Here, I will share how I built a pipeline that automates the distribution of my project.

Motivation

When I began developing my app, I did so with the “next generation” JavaFX. In retrospect, it was the correct choice, if only for the lessons learned. I will describe the old distribution solution to give you a sense of how things have improved since.

Java code is compiled and packaged into a jar that can simply be distributed to any system with a JRE. That was my hopeful reasoning. In reality, Oracle no longer ships a standalone JRE, and JavaFX has not been part of the JDK since version 11. On top of that, the dependencies were missing from the jar I was trying to export. Some of these issues were solved by specifying manifests, source directories and other uninteresting bits. I learned that what I needed was a fat (also called shaded or uber) jar, which bundles the app’s dependencies inside the jar itself. Newer solutions like Spring Boot’s executable jar are essentially that.

In any case, JavaFX requires a JDK. Furthermore, I wanted to distribute an exe file, and converting a jar into an exe is no easy feat. Launch4j is a tool made for this task. My attempts to create a properly configured exe failed, and I ended up with a hardcoded JDK path. For convenience, I made an installer with Inno Setup. Creating installers step by step and testing them consumes a lot of time.

It soon became apparent that my installer’s JDK path picker did nothing at all, and the exe searched exclusively in C:/Program Files/Java/jdk-20. By then, I had a better overview and had also reached the limits of JavaFX. JavaFX offers neither superior performance nor straightforward cross-platform distribution, and least of all a good GUI experience. It is, however, relatively easy to hack together, with the UI code living in an adjacent Java class. RAM consumption was ~100 MB, even after adjusting the heap size in Launch4j. The baggage of a JDK was also something I wanted to avoid.

Tech stack

Replacing the JavaFX GUI meant stepping outside the Java ecosystem and turning to a JavaScript frontend framework. Developing a web interface with these tools comes with many benefits. I decided to use Vue.js with Vite for the frontend, paired with Spring Boot for the backend.

With this setup, the frontend and backend run on separate local servers. For this post, I will use port 8080, the default of Spring Boot’s embedded Tomcat server. Likewise, Vite serves the Vue.js files on its default port, 5173.

Switching from JavaFX to Vue.js also allowed me to drop the need for the JDK. Vite builds optimized static files for the frontend into the dist/ directory. Moving these files to src/main/resources/static lets the backend server serve them on port 8080.
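The manual move from dist/ into src/main/resources/static is just a recursive directory copy. Here is a minimal Java sketch of that step using java.nio.file; the class and method names are hypothetical, and the example paths (vue/dist, src/main/resources/static) assume the project layout shown later in this post.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.stream.Stream;

// Hypothetical helper: recursively copies Vite's build output (e.g. vue/dist)
// into Spring's static resources (e.g. src/main/resources/static).
final class StaticAssetCopier {

    /** Copies every file under source into target and returns the number of files copied. */
    static long copyTree(Path source, Path target) {
        try (Stream<Path> paths = Files.walk(source)) {
            long copied = 0;
            // Files.walk visits a directory before its contents,
            // so each target directory exists before files are copied into it
            for (Path path : (Iterable<Path>) paths::iterator) {
                Path destination = target.resolve(source.relativize(path).toString());
                if (Files.isDirectory(path)) {
                    Files.createDirectories(destination);
                } else {
                    // Overwrite files with the same name from a previous build
                    Files.copy(path, destination, StandardCopyOption.REPLACE_EXISTING);
                    copied++;
                }
            }
            return copied;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Calling copyTree(Path.of("vue/dist"), Path.of("src/main/resources/static")) mirrors the manual step. Note that it never deletes anything: because Vite puts a content hash in asset file names, old bundles pile up in static/ unless the folder is cleared first.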

GraalVM

I also came across GraalVM, a JDK distribution with ahead-of-time compilation. For my use case, it can compile code to a native executable (an exe on Windows). Compilation takes longer, but the resulting program has no warmup time: a Graal-generated executable starts almost instantly. It’s not the intended use case, but native compilation works.

Automating the manual process

I pivoted to these technologies and even made a new NSIS installer. The state of my app was better than before, but the manual steps of distribution were tedious:

- Build static frontend files.

- Move these files to /resources/static.

- Start native compilation.

- Use an external tool to embed an icon.

- Move the executable to a special folder.

- Update the installer script.

- Start the installer packaging script.

- Go back to build the bootJar for other platforms.

- Finally, upload and make a release.

It goes without saying that humans are inefficient and unreliable at repetitive tasks. Any mistake meant redoing the steps, and a lot of time was wasted. Automated pipelines come to the rescue. Over time, I automated most of the steps so that my input is reduced to more or less one click.

A good first step is to set the output dir in vite.config.js or equivalent config. I pointed it to the backend location so that I don’t have to copy the static files manually.

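```js
import { defineConfig } from "vite";
import vue from "@vitejs/plugin-vue";

export default defineConfig({
  plugins: [vue()],
  build: {
    // Write the production build straight into Spring's static resources
    outDir: "../src/main/resources/static",
  },
});
```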

Electron

My app previously used the browser to render its UI by opening a browser tab at localhost. While that is a valid way to offload the UI, it creates more friction for users. I used the opportunity to integrate Electron, whose tooling also brings convenient distribution features.

Electron requires some new files and package.json additions. First, electron-builder, which I use, and its alternative Electron Forge need the following fields in package.json:

```json
"author": "BLCK",
"name": "mrt",
"version": "9.1.0",
"description": "MusicReleaseTracker",
"main": "electron-main.js",
```

The name can’t contain capital letters, and the version has to follow the semantic versioning format. main points to Electron’s entry point. I recommend giving it an obvious name so that it cannot be confused with other main.js, index.js and config.js files.
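To make these two rules concrete, here is a simplified Java check. The regular expressions are my own approximation, not npm’s or semver’s complete rules: npm names also have scoped forms and length limits, and the full semver grammar is stricter (for example about leading zeros).

```java
import java.util.regex.Pattern;

// Simplified checks for the package.json "name" and "version" fields.
// Approximations only: not the complete npm or semver rules.
final class PackageJsonChecks {

    // Lowercase letters, digits, '.', '_' and '-'; must not start with '.' or '_'
    private static final Pattern NAME = Pattern.compile("[a-z0-9-][a-z0-9._-]*");

    // MAJOR.MINOR.PATCH with optional -prerelease and +build suffixes
    private static final Pattern SEMVER =
        Pattern.compile("\\d+\\.\\d+\\.\\d+(-[0-9A-Za-z.-]+)?(\\+[0-9A-Za-z.-]+)?");

    static boolean isValidName(String name) {
        return NAME.matcher(name).matches();
    }

    static boolean isValidVersion(String version) {
        return SEMVER.matcher(version).matches();
    }

    public static void main(String[] args) {
        System.out.println(isValidName("mrt"));                 // true
        System.out.println(isValidName("MusicReleaseTracker")); // false: capital letters
        System.out.println(isValidVersion("9.1.0"));            // true
        System.out.println(isValidVersion("9.1"));              // false: no PATCH part
    }
}
```

This is also why the package name is the lowercase mrt, while the human-readable MusicReleaseTracker goes into description.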

This is the scripts section. To run Electron during development, I type npm run electron. The distExe script packages an executable into an unpacked directory (--dir), and distInstaller goes further and creates an installer:

```json
"scripts": {
  "dev": "vite",
  "buildVue": "vite build",
  "preview": "vite preview",
  "electron": "electron .",
  "distExe": "electron-builder --dir",
  "distInstaller": "electron-builder"
},
```

The pipe

The pipeline is built with GitHub Actions.

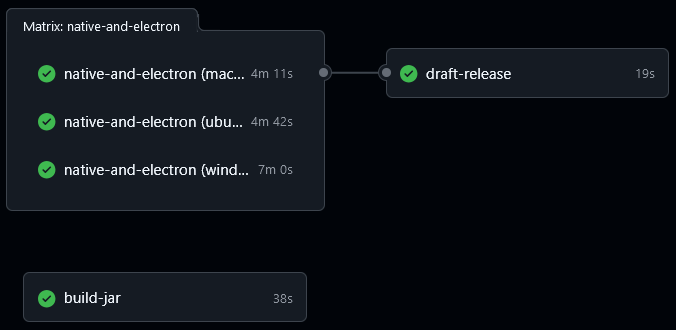

Because native compilation is expensive, I wanted a cheap reference build for testing, so the workflow can optionally build only the jar. Native executables are built for the three operating systems, and a release is drafted only after all builds pass:

```yaml
name: distribution

on:
  workflow_dispatch:
    inputs:
      make-native:
        description: "Build native executables and package with electron-builder? yes/no"
        required: true
        default: "yes"
      make-draft:
        description: "Make draft release? yes/no"
        required: true
        default: "yes"
```

The workflow is triggered manually, since running GraalVM on every pull request makes no sense. The Java version is specified only once, as an environment variable. The other tools’ versions are set to latest.

```yaml
env:
  JAVA_VERSION: "22"
```

The first job, build-jar, sets up the JDK and Gradle, builds a bootJar and uploads it with the upload-artifact action. The last line specifies the jar’s path on the runner. Notice the wildcard: the output file name contains the version. The uploaded artifact is stored temporarily and is available to other jobs as well as for download.

By default, artifacts are accessible only within the workflow run that created them.

```yaml
build-jar:
  runs-on: ubuntu-latest
  steps:
    - uses: actions/checkout@v4
    - name: Setup JDK
      uses: actions/setup-java@v4
      with:
        java-version: ${{ env.JAVA_VERSION }}
        distribution: "temurin"
    - name: Setup Gradle
      uses: gradle/actions/setup-gradle@v4
      with:
        gradle-version: current
    - name: Grant execute permission for gradlew
      run: chmod +x ./gradlew
    - name: Build bootJar
      run: ./gradlew bootJar
    - name: Upload jar
      uses: actions/upload-artifact@v4
      with:
        name: MRT.jar
        path: build/libs/MRT-*.jar
```

For upload-artifact, the wildcard rules are:

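| Pattern | Matches |
| --- | --- |
| build/libs/ | the entire directory |
| build/**/MRT.jar | MRT.jar anywhere under build/, recursively through subdirectories |
| build/libs/MRT-*.jar | any run of characters within one path segment, dots included (e.g. MRT-9.1.0.jar) |
| build/libs/M?T.jar | exactly one character (e.g. MRT.jar) |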
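Java’s built-in glob syntax behaves the same way for these patterns, which makes it a quick way to sanity-check one locally. This is only an approximation: upload-artifact uses its own glob implementation (@actions/glob), and edge cases can differ. For example, in Java’s syntax build/**/MRT.jar needs at least one directory between build/ and MRT.jar.

```java
import java.nio.file.FileSystems;
import java.nio.file.Path;
import java.nio.file.PathMatcher;

// Checks a path against a glob using Java's built-in glob syntax.
// Only an approximation of @actions/glob, which upload-artifact uses.
final class GlobCheck {

    static boolean matches(String glob, String path) {
        PathMatcher matcher = FileSystems.getDefault().getPathMatcher("glob:" + glob);
        return matcher.matches(Path.of(path));
    }

    public static void main(String[] args) {
        // '*' stays within one path segment but happily matches dots
        System.out.println(matches("build/libs/MRT-*.jar", "build/libs/MRT-9.1.0.jar")); // true
        // '?' matches exactly one character
        System.out.println(matches("build/libs/M?T.jar", "build/libs/MRT.jar"));         // true
        // '**' crosses directory boundaries
        System.out.println(matches("build/**/MRT.jar", "build/a/b/MRT.jar"));            // true
    }
}
```

The first case is why a versioned file name like MRT-9.1.0.jar is still picked up by MRT-*.jar.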

The next job invokes the native build. GraalVM cannot really cross-compile, so each operating system needs its own build machine, and GitHub-hosted runners are great for this purpose. A matrix acts like a loop: it repeats the job for every combination of inputs and runs those jobs in parallel.
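Conceptually, a matrix expands into the Cartesian product of its values, with one job per combination. The Java sketch below mimics that expansion for the matrix used in this job. It only illustrates the idea; it is not how GitHub Actions is implemented, and it ignores features like include and exclude.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Illustration only: expands a matrix into one map of values per job.
final class MatrixExpansion {

    static List<Map<String, String>> expand(Map<String, List<String>> matrix) {
        List<Map<String, String>> jobs = new ArrayList<>();
        jobs.add(new LinkedHashMap<>()); // start with one empty combination
        for (Map.Entry<String, List<String>> axis : matrix.entrySet()) {
            List<Map<String, String>> next = new ArrayList<>();
            for (Map<String, String> job : jobs) {
                // Every existing combination is multiplied by each value of this axis
                for (String value : axis.getValue()) {
                    Map<String, String> combination = new LinkedHashMap<>(job);
                    combination.put(axis.getKey(), value);
                    next.add(combination);
                }
            }
            jobs = next;
        }
        return jobs;
    }

    public static void main(String[] args) {
        Map<String, List<String>> matrix = new LinkedHashMap<>();
        matrix.put("os", List.of("ubuntu-latest", "windows-latest", "macos-latest"));
        matrix.put("make-native", List.of("yes"));
        // Prints three combinations, one per operating system
        expand(matrix).forEach(System.out::println);
    }
}
```

With a single value for make-native, the number of jobs equals the number of operating systems.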

```yaml
native-and-electron:
  runs-on: ${{ matrix.os }}
  strategy:
    fail-fast: true
    matrix:
      os: [ubuntu-latest, windows-latest, macos-latest]
      make-native: [yes]
  steps:
```

GraalVM JDK setup is handled by setup-graalvm.

```yaml
    - uses: actions/checkout@v4
    - name: Setup GraalVM
      uses: graalvm/setup-graalvm@v1
      with:
        java-version: ${{ env.JAVA_VERSION }}
        github-token: ${{ secrets.GITHUB_TOKEN }}
    - name: Setup Node.js and npm
      uses: actions/setup-node@v4
      with:
        node-version: 22
        cache: "npm"
        cache-dependency-path: "vue/package-lock.json"
    - name: Install npm dependencies
      run: |
        cd vue
        npm ci
```

The nativeCompile task starts the GraalVM compilation and places the executable in build/native/nativeCompile. The generated executable has the .exe extension on Windows and no extension on Linux and macOS. In the upload path vue/distribution/MRT-*.*, the first wildcard stands for the version and the second for the extension.

A native image gets the .exe extension on Windows, while on Linux and macOS it has none.

```yaml
    - name: Build native executable
      run: |
        chmod +x ./gradlew
        ./gradlew nativeCompile
    - name: Move native executable
      run: mv build/native/nativeCompile/MusicReleaseTracker* vue/buildResources/
    - name: Electron builder
      run: |
        cd vue
        npm run distInstaller
    - name: Upload installer
      uses: actions/upload-artifact@v4
      with:
        name: ${{ matrix.os }}
        path: vue/distribution/MRT-*.*
        if-no-files-found: error
```
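Anything that later has to locate the native executable by name, such as a packaging script or a launcher, must account for the .exe difference between platforms. A small Java sketch, assuming the base name MusicReleaseTracker and OS detection through the standard os.name system property; the class is hypothetical:

```java
import java.util.Locale;

// Resolves the file name of a GraalVM native executable for a given OS:
// ".exe" on Windows, no extension on Linux and macOS.
final class NativeExecutableName {

    static String forOs(String baseName, String osName) {
        // os.name values look like "Windows 11", "Linux" or "Mac OS X"
        boolean windows = osName.toLowerCase(Locale.ROOT).startsWith("windows");
        return windows ? baseName + ".exe" : baseName;
    }

    static String forCurrentOs(String baseName) {
        return forOs(baseName, System.getProperty("os.name"));
    }

    public static void main(String[] args) {
        System.out.println(forCurrentOs("MusicReleaseTracker"));
    }
}
```

The wildcard in the mv step sidesteps this question entirely, which is the simpler choice inside a cross-platform workflow.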

With all installers uploaded under the names of their runners, such as ubuntu-latest, an optional release can be made. needs makes this job wait until all matrix jobs have finished successfully.

```yaml
draft-release:
  needs: native-and-electron
  runs-on: ubuntu-latest
  if: ${{ inputs.make-draft == 'yes' }}
  steps:
```

Jobs don’t share information directly, so the artifacts are downloaded with the download-artifact action. To avoid issues, use the same version of the upload and download actions. All installer artifact names end in -latest, so the pattern *-latest selects them, and merge-multiple extracts them all into a single directory on the runner. The softprops/action-gh-release action then takes everything in downloaded and creates a draft release.

```yaml
    - uses: actions/checkout@v4
    - name: Download candidate artifacts
      uses: actions/download-artifact@v4
      with:
        path: downloaded
        pattern: "*-latest"
        merge-multiple: true
    - name: Release
      uses: softprops/action-gh-release@v2
      with:
        files: downloaded/**
        name: Draft release
        draft: true
```

Confused about artifact names? A file is uploaded under an artifact name. Downloading it manually from the web interface yields the file inside a ZIP, whereas download-artifact downloads and automatically unzips it on the runner. The original file name is therefore preserved, and no wildcards are needed to recover it.

A GitHub artifact is stored as a ZIP. After uploading and downloading a file with the artifact actions, you are left with the same file under its original name.

This is the end of the pipeline. Be aware, however, that I omitted some lines. I will get to them now.

Native executable from jar rather than from source

The initial intent was to build the bootJar and upload it as an artifact. The native-and-electron job could then download it and build the native executable from the JAR instead of compiling from source. If you try this, you will be met with:

```
Error: Main entry point class 'com.x.Main' neither found on classpath:
'/build/libs/MRT.jar' nor modulepath: '/graalvm-jdk/lib/svm/library-support.jar'.
```

In a Spring Boot executable jar, the layout is:

```
MRT.jar
├── META-INF
├── org
└── BOOT-INF
    └── classes
        └── com.blck
            └── MRT
                └── Main.class
```

GraalVM, however, looks for the entry class at com/blck/MRT/Main.class in the root of the jar. The issue is the nested structure of this jar, where the application classes live under BOOT-INF/classes. You could try a custom shaded jar or follow the docs. AOT processing is another concern. I find compiling from source much simpler.
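You can confirm where a jar keeps a class by listing its entries. The Java sketch below uses java.util.jar.JarFile to report the prefix under which a class file is stored: an empty prefix means the jar root (what native-image expects), while BOOT-INF/classes/ indicates a Spring Boot executable jar. The class name com.blck.MRT.Main comes from the tree above; the helper itself is hypothetical.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Path;
import java.util.Optional;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;

// Reports the directory prefix under which a class is stored inside a jar,
// e.g. "" for a plain or shaded jar, "BOOT-INF/classes/" for a Spring Boot jar.
final class JarClassLocator {

    static Optional<String> prefixOf(Path jar, String className) {
        String classFile = className.replace('.', '/') + ".class";
        try (JarFile file = new JarFile(jar.toFile())) {
            return file.stream()
                .map(JarEntry::getName)
                .filter(name -> name.endsWith(classFile))
                .map(name -> name.substring(0, name.length() - classFile.length()))
                // Keep only matches that start at a directory boundary
                .filter(prefix -> prefix.isEmpty() || prefix.endsWith("/"))
                .findFirst();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

For example, prefixOf(Path.of("build/libs/MRT.jar"), "com.blck.MRT.Main") on a bootJar would be expected to return BOOT-INF/classes/, which is exactly why the entry point is not found on the classpath.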

Electron-builder config

Electron-builder can be configured in package.json, where everything is specified under build. The options can be found in the docs. The following excerpt shows how to set an output file name: ${version} is taken from the version field higher up, and .${ext} makes the extension match the generated file’s extension. The folder holding additional build files and scripts is named, well, buildResources. output, meaning the temporary build files and the installer, is set to the distribution folder. Each platform has its own key where any option from the docs can be applied. Windows installers can be NSIS or MSI. And electron-builder handles icon embedding! Another manual step eliminated.

```json
"build": {
  "directories": {
    "output": "distribution",
    "buildResources": "buildResources"
  },
  "win": {
    "artifactName": "MRT-${version}-win.${ext}",
    "extraFiles": [
      {
        "from": "buildResources/MusicReleaseTracker.exe",
        "to": "buildResources/MusicReleaseTracker.exe"
      }
    ],
    "target": [ "msi" ],
    "icon": "buildResources/MRTicon.ico"
  },
  "msi": { ... },
  "linux": { ... },
  "mac": { ... }
},
```
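electron-builder resolves the ${version} and ${ext} macros in artifactName itself. Purely to illustrate what such a template resolves to, here is a tiny Java sketch of the substitution; it is not electron-builder’s implementation, and the values 9.1.0 and msi are simply the ones used in this post.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Illustration of ${name} macro substitution as used in artifactName.
// Macros without a value are left untouched.
final class ArtifactNameTemplate {

    private static final Pattern MACRO = Pattern.compile("\\$\\{([A-Za-z]+)\\}");

    static String expand(String template, Map<String, String> values) {
        Matcher m = MACRO.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String replacement = values.getOrDefault(m.group(1), m.group());
            // quoteReplacement keeps '$' in values from being read as a group reference
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        String name = expand("MRT-${version}-win.${ext}", Map.of("version", "9.1.0", "ext", "msi"));
        System.out.println(name); // MRT-9.1.0-win.msi
    }
}
```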

Path strategy

This is the project’s file structure.

```
root
├── src/main/resources/static
├── build
│   ├── libs
│   └── native/nativeCompile
└── vue
    ├── package.json
    ├── electron-main.js
    ├── buildResources
    ├── distribution
    └── src
```

The electron-main.js manages the lifecycle of the native executable. Where to put the executable? I could leave it in nativeCompile and point to it.

```js
app.whenReady().then(() => {
  externalEXE = spawn("../build/native/nativeCompile/fileName", {
```

This works fine and doesn’t require any moving. I could also point the electron-builder config there, but then the distribution will not work. In the electron-builder config, you may have noticed:

```json
"extraFiles": [
  {
    "from": "buildResources/MusicReleaseTracker.exe",
    "to": "buildResources/MusicReleaseTracker.exe"
  }
],
```

This copies the file at the from location to the to location in the distribution. The escaping ../ in ../build/native/nativeCompile/fileName will cause trouble. I’m aware there are other ways around it, but I move the executable to buildResources and mirror that structure in the distribution. Mirroring means I don’t have to maintain two paths in electron-main.js.

```js
app.whenReady().then(() => {
  externalEXE = spawn("buildResources/fileName", {
```

Notably, the file name’s extension does not need to be specified there. To sum up: the backend executable is moved to buildResources, where I already keep icons and other resources for electron-builder. Built installers end up in distribution. Once all installers are ready, a release is drafted. The bootJar from the first job is located in build/libs.
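The trouble with ../ can be checked mechanically: once resolved, such a path points outside the app’s own directory, so it will not exist next to the installed app. A minimal Java sketch of that check, assuming the app directory (vue/ here) as the base; the helper is hypothetical and the paths are the ones from this post.

```java
import java.nio.file.Path;

// Checks whether a relative path stays inside a base directory after
// ".." segments are resolved.
final class PathEscapeCheck {

    static boolean staysInside(Path base, String relative) {
        Path normalizedBase = base.toAbsolutePath().normalize();
        Path resolved = normalizedBase.resolve(relative).normalize();
        // Path.startsWith compares whole name elements, not raw strings
        return resolved.startsWith(normalizedBase);
    }

    public static void main(String[] args) {
        Path appRoot = Path.of("vue");
        System.out.println(staysInside(appRoot, "buildResources/MusicReleaseTracker"));                // true
        System.out.println(staysInside(appRoot, "../build/native/nativeCompile/MusicReleaseTracker")); // false
    }
}
```

Mirroring buildResources in the distribution keeps the path in the first category both during development and after installation.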

Point Electron to a port or to index.html

Electron can either load the static frontend files itself or connect to a port, as the browser did previously.

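```js
function createWindow() {
  // Option 1 (not used): let Electron load the built frontend files itself
  // win.loadFile("dist/index.html");

  // Option 2: connect to the backend's port, like a browser
  win.loadURL("http://localhost:57782");
```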

I don’t see much difference here. I tried the former but couldn’t get it to work properly. Surprising no one, the backend has to be running before loadURL is called.

Possible race condition

The backend always needs some time to initialise. Creating an Electron window that calls the backend immediately can cause a race condition. Therefore, we should ensure that requests are sent only once the backend is ready:

```js
await checkBackendReady();
createWindow();
```

In my project, checkBackendReady simply polls a backend endpoint. It’s as good a solution as it gets with a REST controller.
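The polling idea does not depend on Electron: request an endpoint repeatedly until it answers 200 OK or a deadline passes. Here it is sketched in Java with java.net.http.HttpClient. The helper is hypothetical; the endpoint, interval and timeout are whatever the caller passes in, not values from the project.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.time.Instant;

// Polls an HTTP endpoint until it answers 200 OK or the timeout expires.
final class BackendReadiness {

    static boolean waitUntilReady(URI endpoint, Duration timeout, Duration interval) {
        HttpClient client = HttpClient.newBuilder().connectTimeout(interval).build();
        HttpRequest request = HttpRequest.newBuilder(endpoint).timeout(interval).GET().build();
        Instant deadline = Instant.now().plus(timeout);
        while (Instant.now().isBefore(deadline)) {
            try {
                HttpResponse<Void> response =
                    client.send(request, HttpResponse.BodyHandlers.discarding());
                if (response.statusCode() == 200) {
                    return true; // backend is up: safe to create the window
                }
            } catch (IOException notReadyYet) {
                // Connection refused or timed out: the backend is still starting
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
            try {
                Thread.sleep(interval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
        return false;
    }
}
```

Returning false on timeout leaves it to the caller to decide whether to show an error or keep waiting, which beats creating a window that fires requests into the void.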

CORS policy

Sooner or later, requests get blocked because of a missing CORS (Cross-Origin Resource Sharing) configuration. Browsers restrict requests to a different origin, and a different port counts as a different origin, so an axios request from 5173 to 8080 is blocked unless the backend explicitly allows it. If you encounter this issue, configure Spring to allow the frontend’s origin. The most basic setup:

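```java
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.web.servlet.config.annotation.CorsRegistry;
import org.springframework.web.servlet.config.annotation.WebMvcConfigurer;

@Configuration
public class CorsConfig {
    // Allow the Vite dev server (port 5173) to call the backend's /api endpoints
    @Bean
    public WebMvcConfigurer corsConfigurer() {
        return new WebMvcConfigurer() {
            @Override
            public void addCorsMappings(CorsRegistry registry) {
                registry.addMapping("/api/**").allowedOrigins("http://localhost:5173");
            }
        };
    }
}
```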
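What makes such a request cross-origin is that an origin is the triple of scheme, host and port, so http://localhost:5173 and http://localhost:8080 differ even though the host is the same. A small Java sketch of that comparison; the class is hypothetical, and it fills in port 443 for https and 80 otherwise when a URL omits the port.

```java
import java.net.URI;
import java.util.Locale;
import java.util.Objects;

// Compares the origins (scheme, host, port) of two URLs.
final class Origins {

    static boolean sameOrigin(URI a, URI b) {
        return scheme(a).equals(scheme(b))
            && host(a).equals(host(b))
            && port(a) == port(b);
    }

    private static String scheme(URI u) {
        return u.getScheme().toLowerCase(Locale.ROOT);
    }

    private static String host(URI u) {
        return Objects.requireNonNull(u.getHost(), "URL has no host").toLowerCase(Locale.ROOT);
    }

    private static int port(URI u) {
        if (u.getPort() != -1) {
            return u.getPort();
        }
        // No explicit port: 443 for https, 80 otherwise
        return "https".equals(scheme(u)) ? 443 : 80;
    }

    public static void main(String[] args) {
        URI frontend = URI.create("http://localhost:5173");
        URI backend = URI.create("http://localhost:8080");
        System.out.println(sameOrigin(frontend, backend)); // false: the ports differ
    }
}
```

Once the frontend is served by Spring itself on 8080, page and API share an origin and the CORS mapping only matters for the Vite dev server.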

GraalVM AOT

So far, I have omitted the steps that handle GraalVM AOT (ahead-of-time) compilation.

The Native Image tool relies on static analysis of an application’s reachable code at runtime. However, the analysis cannot always completely predict all usages of the Java Native Interface (JNI), Java Reflection, Dynamic Proxy objects, or class path resources.

External resources can cause the compiled program to break. You may encounter an error like Uncaught (in promise) TypeError: thing is undefined. For such resources, you should provide tracing information to the AOT compiler.
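Here is the kind of code that static analysis cannot see through: the class to load exists only as a string at runtime. It works on the JVM, but in a native image such a lookup typically fails unless the class is listed in the reachability metadata, such as the reflection config the tracing agent produces. A minimal Java illustration, with java.util.ArrayList standing in for an application class:

```java
import java.util.List;

// The target type is chosen by a string at runtime, so native-image's
// static analysis cannot tell which class must be available for reflection.
final class ReflectiveLoading {

    @SuppressWarnings("unchecked")
    static List<String> newListByName(String className) throws ReflectiveOperationException {
        Class<?> type = Class.forName(className);
        return (List<String>) type.getDeclaredConstructor().newInstance();
    }

    public static void main(String[] args) throws ReflectiveOperationException {
        // The class name comes from outside the program, as config or user input would
        String name = args.length > 0 ? args[0] : "java.util.ArrayList";
        List<String> list = newListByName(name);
        list.add("works on the JVM");
        System.out.println(list.getClass().getName() + " " + list);
    }
}
```

Resource lookups whose paths are built at runtime, like hashed frontend bundles, run into the same blind spot.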

Placing frontend files outside Spring’s resources

I added this section long after the original post, after finding a better way to keep Graal from affecting the JS files. I left the old section below, but you can safely skip to “Wrapping up”. The simple solution is to run the native compilation first, then build the frontend and move the static files into the same directory as the executable. This requires configuration in Spring as well as path handling in Electron and the executable. Please refer to the source code for the working implementation.

The unreliable way

The information is generated by the tracing agent. What worked for me was running the app through Gradle’s bootRun task with the agent passed as a JVM argument:

```groovy
bootRun {
    jvmArgs("-agentlib:native-image-agent=config-output-dir=tracing")
}
```

This generates the information in a tracing directory at the project root. You should verify the files to make sure they cover the problematic paths. The config files cover reflection, serialisation, JNI and more. In resource-config.json, the frontend file was captured:

```json
"pattern":"\\QMETA-INF/resources/assets/index-CkTRZNgD.js\\E"
```

GraalVM will register the config files at build/resources/aot/META-INF/native-image. You can verify it additionally by compiling with -H:Log=registerResource:3.

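Unfortunately, Vite appends a content hash to static file names, so the names change eventually. I made use of regular expressions, and it was effective temporarily. Keep in mind that everything between \Q and \E is matched literally, so the quoted part has to end before the wildcard:

```json
"pattern":"\\QMETA-INF/resources/assets/index-\\E.*\\.js"
```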
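Since these patterns are Java regular expressions, java.util.regex can check one before a long native build. This quick sketch compares a pattern that wraps the wildcard inside \Q … \E with one that ends the quote first, using the file name captured by the agent above.

```java
import java.util.regex.Pattern;

// Compares resource patterns against a hashed Vite asset path.
final class ResourcePatternCheck {

    static boolean matches(String regex, String resource) {
        return Pattern.matches(regex, resource);
    }

    public static void main(String[] args) {
        String asset = "META-INF/resources/assets/index-CkTRZNgD.js";

        // Everything between \Q and \E is literal, including ".*" and "\."
        String fullyQuoted = "\\QMETA-INF/resources/assets/index-.*\\.js\\E";
        // The quote ends before the wildcard, so ".*" is a regex again
        String quoteThenWildcard = "\\QMETA-INF/resources/assets/index-\\E.*\\.js";

        System.out.println(matches(fullyQuoted, asset));       // false
        System.out.println(matches(quoteThenWildcard, asset)); // true
    }
}
```

The Java string literals happen to be written exactly like the JSON values, since both escape the backslash the same way.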

If you commit the files into the /build directory tree and compile, you will see the tracing information disappear. I didn’t verify this, but I suspect that a missing build/resources/main/ triggers a reset before the jar build or native compile. Since that directory contains frontend assets that shouldn’t be hardcoded, on the runner I first build a bootJar to generate the file structure and then copy the tracing information:

```yaml
    - name: Build bootJar to create file structure
      run: |
        chmod +x ./gradlew
        ./gradlew bootJar
    - name: Copy AOT tracing info
      run: cp vue/buildResources/graal-tracing/* build/resources/aot/META-INF/native-image/
```

Wrapping up

You can view the pipeline’s source code snapshot here.

As a result, I only have to launch the workflow and come back later to confirm the release. Virtual runners enable distribution for all platforms. JavaFX is no match for this setup. Interestingly, startup takes roughly 0.5 s despite the Electron window.

The last thing to improve is the development experience. To see a Vue.js change in the Electron window, GraalVM would have to compile a new executable. Since Electron simply connects to the backend port, a development environment variable can disable the native executable, and Electron will then connect to a locally running dev server instead:

app.whenReady().then(() => {

if (process.env.NODE_ENV !== "development") {

externalEXE = spawn("buildResources/MusicReleaseTracker", {